Fast-Forward Video Based on Semantic Extraction

2016 IEEE International Conference on Image Processing (ICIP)

Abstract

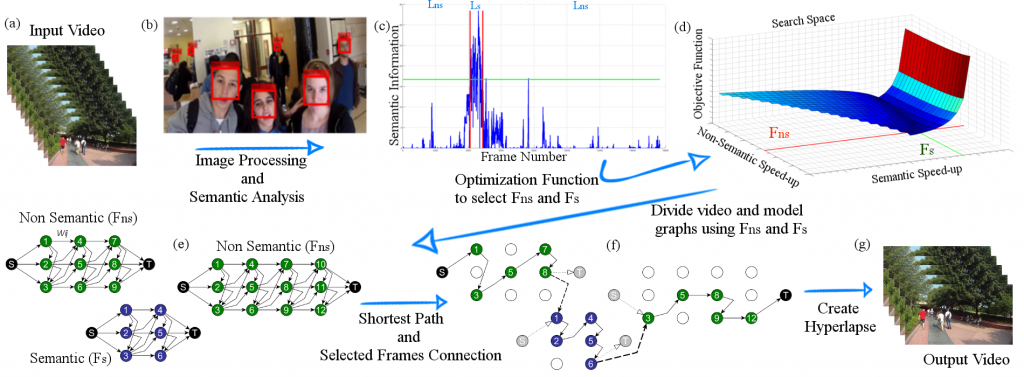

Thanks to the low operational cost and large storage capacity of smartphones and wearable devices, people are recording many hours of daily activities, sports actions, and home videos. These videos, also known as egocentric videos, are generally long-running streams of unedited content, which makes them boring and visually unpalatable and raises the challenge of making egocentric videos more appealing. In this work, we propose a novel methodology that composes a fast-forward video by selecting frames based on semantic information extracted from the images. The experiments show that our approach outperforms the state of the art in preserving semantic information and that it also produces videos that are more pleasant to watch.

Keywords: Semantic Information, First-person Video, Fast-Forward, Video Sampling, Video Segmentation
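To make the idea of semantics-aware fast-forwarding concrete, the following is a minimal sketch of the general technique. It is not the authors' algorithm, and the paper's own formulation may differ. The sketch assumes a hypothetical per-frame semantic score in [0, 1], for example produced by a face or pedestrian detector. It splits the video into semantic and non-semantic segments by thresholding that score. Semantic segments are then played at a lower speed-up, and the overall speed-up stays close to a target value. The function names `segment_by_semantics`, `speedups` and `select_frames`, and the parameters `threshold` and `ratio`, are illustrative choices only.

```python
from itertools import groupby


def segment_by_semantics(scores, threshold=0.5):
    """Split frames into contiguous runs labelled semantic (score >= threshold) or not.

    Returns a list of (start, end, is_semantic) tuples with end exclusive.
    The threshold is a hypothetical parameter of this sketch.
    """
    segments = []
    start = 0
    for is_semantic, run in groupby(scores, key=lambda s: s >= threshold):
        length = len(list(run))
        segments.append((start, start + length, is_semantic))
        start += length
    return segments


def speedups(segments, target_speedup, ratio=3.0):
    """Pick (semantic, non-semantic) speed-ups so that the output has roughly
    n_frames / target_speedup frames and non-semantic parts run `ratio` times faster."""
    n_sem = sum(end - start for start, end, sem in segments if sem)
    n_non = sum(end - start for start, end, sem in segments if not sem)
    total = n_sem + n_non
    # Solve n_sem / v + n_non / (ratio * v) = total / target_speedup for v.
    v = target_speedup * (n_sem + n_non / ratio) / total
    v = max(v, 1.0)  # never play slower than real time
    return v, ratio * v


def select_frames(scores, target_speedup, threshold=0.5, ratio=3.0):
    """Return the indices of the frames kept in the fast-forward output."""
    if not scores:
        return []
    segments = segment_by_semantics(scores, threshold)
    v_sem, v_non = speedups(segments, target_speedup, ratio)
    selected = []
    for start, end, is_semantic in segments:
        step = v_sem if is_semantic else v_non
        pos = float(start)
        # Uniform sampling inside each segment with its own (fractional) step.
        while pos < end:
            selected.append(int(pos))
            pos += step
    return selected


if __name__ == "__main__":
    # 70 frames: a 10-frame "interesting" stretch in the middle.
    scores = [0.0] * 30 + [1.0] * 10 + [0.0] * 30
    print(select_frames(scores, target_speedup=5))
```

With a target speed-up of 5, the 10-frame semantic stretch keeps as many frames as each 30-frame non-semantic stretch, so the interesting part is shown at a slower pace. Uniform sampling inside each segment is used here only for simplicity. A graph-based frame selection, such as the shortest-path formulation used by EgoSampling, could replace that inner loop.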

Methodology and Results.

Citation

@InProceedings{Ramos2016,
  author    = {W. L. S. Ramos and M. M. Silva and M. F. M. Campos and E. R. Nascimento},
  booktitle = {IEEE International Conference on Image Processing (ICIP)},
  title     = {Fast-forward video based on semantic extraction},
  year      = {2016},
  month     = {Sep.},
  address   = {Phoenix, USA},
  pages     = {3334-3338},
  doi       = {10.1109/ICIP.2016.7532977}
}

Baselines

We compare the proposed methodology against the following methods:

- EgoSampling – Poleg et al., EgoSampling: Fast-forward and stereo for egocentric videos, CVPR 2015.

- Microsoft Hyperlapse – Joshi et al., Real-time hyperlapse creation via optimal frame selection, ACM Trans. Graph. 2015.

Datasets

We conducted the experimental evaluation using the following dataset:

- EgoSequences – Poleg et al., EgoSampling: Fast-forward and stereo for egocentric videos, CVPR 2015.

Authors

Washington Luis de Souza Ramos

PhD Candidate

Michel Melo da Silva

Researcher

Mario F. M. Campos

Professor